Outside of some unobtainable perfect state, before you’ve even pressed the button to begin training your model you have already hindered its accuracy in some form. The reason for this is simple, your model is based entirely on the selection and composition of your training image set.

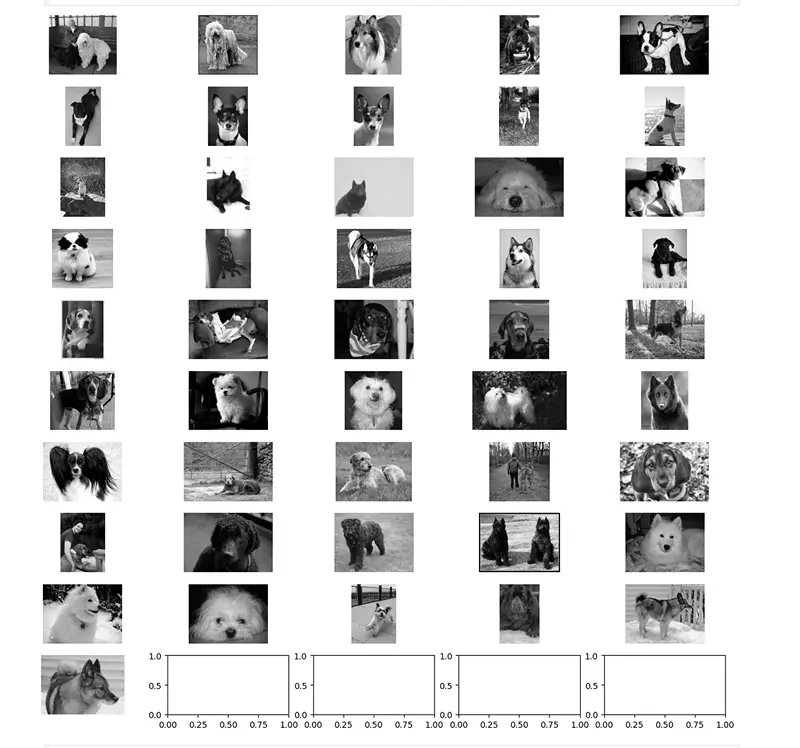

Take for example the Stanford Dogs dataset, a famous one used by lots of researchers to test quality of models given the difficulty of discerning specific breed markers. You can see from their number of training images per class it’s an unbalanced dataset to begin with. That’s not the half of it though.

The real problem with this dataset begins to appear when you examine some of the random images that comprise it.

Would you believe these images were part of the set?

A Christmas color pop?

You see the issue with training a model on such things is you’re training the model specifically to look for data that doesn’t exist in the real world.

This in turn leads to further questions though.

If you were to benchmark a model based on this dataset’s accuracy with it what are you optimizing for exactly? For identifying Christmas color pop themed dogs in the real world?

Each of those images drops its color channels for some pixels in favor of a styled output. By benchmarking two models on this, assuming they are close in results, you may mistakenly be favoring a model that is not properly reflective of real life scenarios.

Let’s take this example to the extreme: if you were to provide only color popped images and trained two models based on them and then used their results on real dog images what properties of the model are you optimizing for? You’re optimizing for models that ignore color data or give less weight in given scenarios. Even if you had a model that returned 90% accuracy in that given state it does not reflect that the model you’ve created has a better architecture just that it solves your particular (and may I add artificial) problem better perhaps by giving less weight to the color than the other model does.

What’s interesting to me is this data supposedly came from ImageNet. Is ImageNet full of both black and white and color pop images then? Why would researchers releasing this dogs subset not take the time to investigate the underlying images?

I started with a simple check for grayscale images:

This was generated by this notebook.

However, I noticed those color pops when further reviewing for other issues so opted to enhance with the help of a stackoverflow post on the subject:

def is_grayscale(img):

image_pil = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))

stat = ImageStat.Stat(image_pil)

if sum(stat.sum)/3 == stat.sum[0]: # Check the average with any element value

return True # If grayscale

# https://stackoverflow.com/questions/68352065/how-to-check-whether-a-jpeg-image-is-color-or-grayscale-using-only-python-pil

### splitting b,g,r channels

b,g,r=cv2.split(img)

### getting differences between (b,g), (r,g), (b,r) channel pixels

r_g=np.count_nonzero(abs(r-g))

r_b=np.count_nonzero(abs(r-b))

g_b=np.count_nonzero(abs(g-b))

### sum of differences

diff_sum=float(r_g+r_b+g_b)

### finding ratio of diff_sum with respect to size of image

ratio=diff_sum/img.size

return ratio<=0.35

This script isn’t exact and sometimes pulls in false positives but for the Stanford Dogs dataset it’s clear it finds quite a few bad images.

In addition I found this interesting post on stackoverflow related to identifying sepia images.

At its core to have an accurate model you must first:

- Identify the target you wish to model

- Collect datapoints that represent the model in various states that are important to you

- Ensure that your production consumer of the model represents the same perspective with similar settings

- Balance your dataset to avoid favoring a given output

That is it’s perfectly fine to train a dog model on Christmas color pops if you’re consistent with it, you balance the data accordingly, and the end hardware is setup to perform a Christmas color pop pass on the image prior to inferencing. If you don’t plan on doing that, why would you ever include those images in your set? They will fail on validation, they will corrupt your model with their invalid perspectives of the world, and you’ll never reach the same level of accuracy as you would have had you pruned it.

If others know of a good open source tool to use for inspecting datasets I’d love to hear of one. Perhaps something for a project for another day for myself if there isn’t something easily available.

I can image a useful tool that could filter a folder contents based on:

- Sepia

- Black and white

- Color pop

- ImageNet outputs (specify class, find item)

- Custom model outputs

If something like that existed one could more easily prune bad data by finding it effectively.

For now though until you or I find such tools for inspection we must stay vigilent and check our data thoroughly especially data collected by third parties. Some preparation now ultimately avoids issues down the line.